Revolutionizing Robotics: Teaching Machines to Understand Their Boundaries

In the rapidly evolving field of robotics, researchers at the Massachusetts Institute of Technology (MIT) have developed a method to help robots carry out open-ended tasks safely. The key idea is to give robots the ability to recognize their own limitations, which improves both the safety and the effectiveness of autonomous operation. This matters more and more as machines increasingly operate in environments built around people.

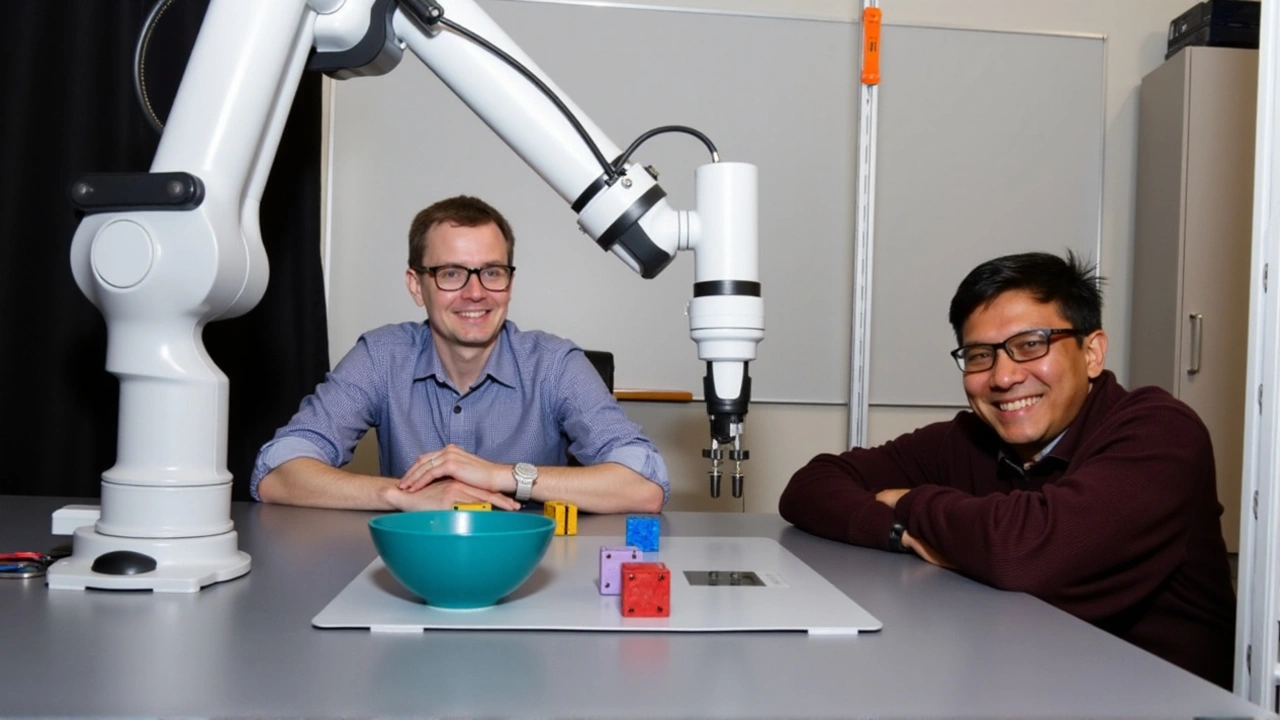

The research is led by Kimia Hamidieh, a graduate student at MIT who developed the method with her team. Their approach uses vision models to help robots recognize when they are uncertain about their surroundings or lack the information they need to proceed with a task. This awareness matters not only for completing tasks successfully but also for safety, because it helps prevent failures and mishaps.

Vision Models: The Heart of Machine Awareness

The team's strategy combines computer vision and machine learning to train robots to pause and reassess a situation when uncertainty arises. For instance, a robot navigating a complex environment or manipulating intricate objects can decide on its own whether to continue, stop, or gather more information. This decision-making ability is especially valuable in dynamic settings, where conditions change quickly and the next step must be chosen both precisely and safely.
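The article does not describe the team's actual algorithm. Purely as an illustration, here is one common way to turn a vision model's output into the continue, stop, or gather-more-information choice described above: measure uncertainty as the normalized entropy of the model's class probabilities and compare it against two thresholds. The function names, the entropy measure, and the threshold values (0.3 and 0.7) are hypothetical assumptions chosen for this sketch, not details of the MIT method.

```python
import math
from enum import Enum
from typing import Sequence


class Action(Enum):
    PROCEED = "proceed"          # confident enough to act
    GATHER_INFO = "gather_info"  # unsure: e.g. look again from another viewpoint
    STOP = "stop"                # too uncertain to act safely


def normalized_entropy(probs: Sequence[float]) -> float:
    """Entropy of a probability vector, scaled to [0, 1] (1 = maximally uncertain)."""
    if len(probs) < 2:
        return 0.0
    total = sum(probs)
    h = -sum((p / total) * math.log(p / total) for p in probs if p > 0)
    return h / math.log(len(probs))


def choose_action(probs: Sequence[float],
                  proceed_below: float = 0.3,
                  stop_above: float = 0.7) -> Action:
    """Hypothetical three-way rule: act when confident, gather info when unsure, halt when very unsure."""
    u = normalized_entropy(probs)
    if u < proceed_below:
        return Action.PROCEED
    if u > stop_above:
        return Action.STOP
    return Action.GATHER_INFO


if __name__ == "__main__":
    print(choose_action([0.97, 0.02, 0.01]))  # Action.PROCEED
    print(choose_action([0.8, 0.15, 0.05]))   # Action.GATHER_INFO
    print(choose_action([1/3, 1/3, 1/3]))     # Action.STOP
```

A rule like this is only as good as the probabilities feeding it: an overconfident model can report low entropy even when it is wrong, so a real system would also need some form of calibration.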

Hamidieh and her team have demonstrated their approach in several complex scenarios, with the clearest results in navigation and object manipulation tasks. Navigation, with its unforeseen obstacles and unpredictable environments, poses a significant challenge for traditional robot path-planning algorithms. With these techniques, a robot can detect potential risks and act to mitigate them before they turn into problems. The benefits extend beyond navigation to any setting where robots interact with users.

Implications for Real-World Applications

Looking at real-world implications, the method holds considerable promise. As robots become part of more areas of daily life, their roles are shifting from static, predefined tasks to more dynamic and flexible ones. Whether a robot is helping with household chores, assisting in a healthcare setting, or performing complex industrial operations, the ability to recognize and respond to an uncertain environment becomes essential. The approach offers a dual advantage: it expands the range of tasks a robot can take on while keeping people safe in environments shared by humans and machines.

Advances like this suggest that teaching machines to understand their operational limits is not only transformative but necessary. Building safety into the software that controls these robots can make them reliable without constant supervision or frequent human intervention. This capability is a cornerstone for developing autonomous robots that work safely and independently in real-world settings.

Constructing a Safer Future with Machine Learning

Ultimately, the MIT team's contribution reaches beyond academia. It opens the door to future research and commercial applications in which customization and safety go hand in hand. The algorithms and techniques developed can serve as a template for further exploration and refinement, giving other researchers and industry practitioners a foundation on which to build a more integrated robotic future.

While the techniques need to be further optimized and tailored for various applications, the potential of this advancement is truly inspiring. The integration of such novel methodologies in robotics points towards a future where technology can be harmoniously interwoven with human activities, making life both richer and safer.

As we continue to make strides in teaching machines to know their boundaries, we move closer to a world where human-robot interactions are common, seamless, and safe. In a landscape where technology evolves quickly and often into uncharted territory, innovations like those from MIT not only enhance the capability of robots but also keep the focus on what really matters: safety and efficiency in our shared environments.

Comments

Mitch Roberts December 14, 2024 AT 12:36

this is wild frfr robots now have self-doubt? lol i mean... kinda cool? they pause like me when i try to assemble ikea furniture without the manual

finally something that doesn't end in a robot knocking over a toddler

Mark Venema December 14, 2024 AT 13:36

This development represents a significant milestone in the field of autonomous systems. By embedding uncertainty awareness into robotic perception pipelines, MIT researchers have addressed a fundamental limitation in current reinforcement learning architectures. The vision-based confidence estimation framework enables probabilistic decision-making that aligns with human safety expectations. This approach could serve as a foundational module for future safety-critical deployments in healthcare, elder care, and public infrastructure.

Brian Walko December 15, 2024 AT 11:48

I'm really impressed by how this balances capability with caution. Too many robotics projects chase raw performance without thinking about the real-world consequences. This isn't just about avoiding crashes; it's about building trust. When a robot hesitates instead of charging ahead blindly, people actually feel safer around it. That psychological component is just as important as the algorithm.

Derrek Wortham December 16, 2024 AT 10:00

This is why we can't have nice things. Now robots are going to start playing it safe so much they'll never do anything useful. What's next? A robot that refuses to pick up your socks because it's 'uncertain about fabric classification'? We're turning machines into overthinkers. We need bots that act, not bots that meditate on their existence before lifting a coffee cup.

Anjali Sati December 17, 2024 AT 02:27

Another overhyped paper. Vision models don't solve uncertainty; they just add layers of computational noise. Real-world environments need physical sensors, not fancy neural nets that hallucinate confidence scores. Also, why is the lead researcher still a grad student? This should have been a lab project 5 years ago.

Mitch Roberts December 18, 2024 AT 10:11

lol @2553 you sound like my uncle who says 'i built a radio in 1972 with a potato' but still can't use wifi

also @2552 i live in bangalore and my auto rickshaw driver doesn't pause for anything except traffic cops and snacks

this is different. this is like teaching a toddler to say 'i don't know' instead of just grabbing the cookie
